Project Plan

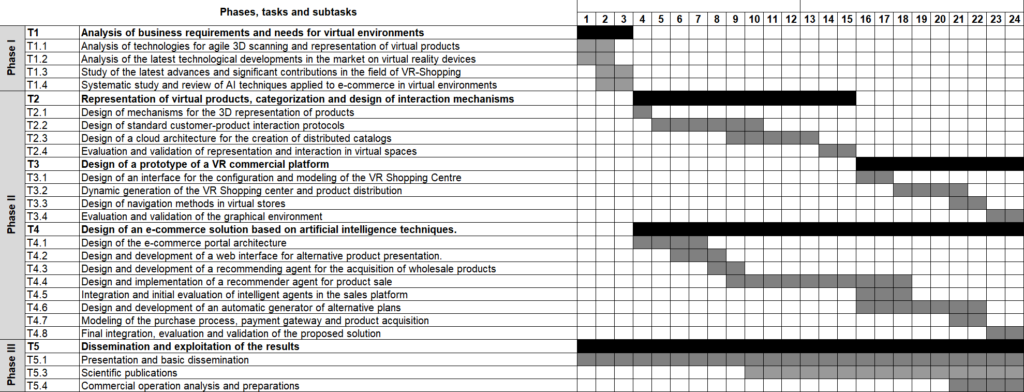

The following is a work and research plan for the next two years, developed within the AIR research group.

Planning for the Next Two Years

2023-2025

Current research situation

In the timeline shown above, some of the objectives have been met and we are currently working towards others. All the objectives of the first phase have been met, which has allowed us to lay the foundation of the previously shown architecture and to map the state of the art of VR Shopping and of the AI techniques used in e-commerce in virtual environments. These results have served as a basis for developing a small scenario in which to test the functions of the SDK with which I will work initially, and for presenting the background from which we start in a recent work that we carried out. Regarding subtask T4.1, the initial version of the portal architecture was presented in that work.

In addition, we have analyzed the different virtual reality devices, focusing on VR headsets that can operate autonomously while offering powerful hardware, as this has been the market trend in recent years. Based on this analysis, we chose the Meta Quest 2 (formerly Oculus Quest 2) as the device that will support our research, due to its technical characteristics, integration with development environments, and popularity in the market. However, I have considered the possibility of extending our research to other headsets that will be marketed in the next few years.

Current work is focused on the low-cost, high-quality digitization of products for virtual environments. This line of work is related to the Product Digitisation module of the architecture, as we want to explore different scanning technologies that allow small businesses to generate high-quality 3D models of their products without having to invest large amounts of money in professional 3D scanners. Currently, I am researching scanning solutions that can be used with mobile devices, such as Polycam, which is based on photogrammetry, or Luma AI (https://lumalabs.ai), which is based on an emerging AI technology called Neural Radiance Fields (NeRF).

To this end, we have scanned a set of objects of different materials and sizes to obtain a heterogeneous sample. To examine the quality of the generated 3D models, we are collecting a series of metrics, such as the polygonal density, the number of vertices and polygons of the model, and the degree to which the scanning tools isolate the object from its environment. In addition, we are comparing the point clouds of the generated models with respect to the highest-quality one to check their accuracy.
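As an illustration of the point cloud comparison, the following minimal sketch computes the mean and maximum nearest-neighbour distance from a candidate cloud to a reference cloud. It is not the tooling used in this work: the function names and the sample coordinates are hypothetical, and it assumes both clouds are already aligned in the same coordinate frame and scale.

```python
import math

def nearest_distance(point, cloud):
    """Distance from one point to its closest neighbour in `cloud` (brute force)."""
    return min(math.dist(point, other) for other in cloud)

def cloud_to_reference_error(candidate, reference):
    """Return (mean, max) nearest-neighbour distance from candidate to reference.

    Assumption: both clouds share the same coordinate frame and units.
    """
    distances = [nearest_distance(p, reference) for p in candidate]
    return sum(distances) / len(distances), max(distances)

# Hypothetical sample data: a tiny reference cloud and a slightly noisy scan.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
candidate = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.0), (0.0, 1.2, 0.0)]

mean_err, max_err = cloud_to_reference_error(candidate, reference)
print(f"mean error: {mean_err:.3f}, max error: {max_err:.3f}")
```

The brute-force search is quadratic in the number of points, so for real scans a spatial index such as a k-d tree would likely be needed. A lower mean error suggests that the candidate model lies closer to the reference surface.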

We are also researching the design of customer-product interactions. The aim is to design interactions that allow users to understand how a product works before buying it, for example by following an assembly manual, and to experiment with it. Besides allowing users to inspect the product in detail, these interactions will be used by the recommendation system.

Furthermore, we are designing the recommendation system (RS) for product sales. On the one hand, part of the design is focused on evaluating existing techniques for building an RS, such as collaborative filtering or content-based filtering. We are working with a handcrafted dataset in which products are divided into categories. Using fuzzy logic and memory-based collaborative filtering, the aim is to provide recommendations that do not strictly belong to a single category. On the other hand, we are investigating the connection that must exist between the RS and the virtual environment, as the recommendations will change the displayed products and the environment must facilitate these changes. We are also exploring options to include a virtual assistant, in order to investigate how such agents facilitate navigation and improve the user experience in a VR Shopping context.
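One possible way to combine the two techniques is sketched below. It is only an illustration under stated assumptions, not the design of the RS described here: the ratings, the category membership degrees, the function names, and the choice of cosine similarity and max-min composition are all hypothetical. A user-based (memory-based) collaborative filter predicts a rating, and a fuzzy affinity lets a product match a user through any category it partially belongs to.

```python
import math

# Hypothetical ratings: user -> {product: rating on a 1-5 scale}.
ratings = {
    "ana":   {"desk": 5, "lamp": 4, "chair": 5},
    "luis":  {"desk": 4, "lamp": 5, "chair": 4, "shelf": 5},
    "marta": {"desk": 1, "lamp": 2, "shelf": 1},
}

# Hypothetical fuzzy memberships: each product belongs to each category
# to a degree in [0, 1] instead of to exactly one category.
memberships = {
    "desk":  {"office": 0.9, "decor": 0.2},
    "lamp":  {"office": 0.6, "decor": 0.7},
    "chair": {"office": 0.8, "decor": 0.3},
    "shelf": {"office": 0.5, "decor": 0.6},
}

def cosine_similarity(a, b):
    """Cosine similarity between two users over their co-rated products."""
    common = set(a) & set(b)
    if not common:
        return 0.0
    dot = sum(a[i] * b[i] for i in common)
    norm_a = math.sqrt(sum(a[i] ** 2 for i in common))
    norm_b = math.sqrt(sum(b[i] ** 2 for i in common))
    return dot / (norm_a * norm_b)

def predict_rating(user, product):
    """Similarity-weighted average of the neighbours' ratings for `product`."""
    num = den = 0.0
    for other, other_ratings in ratings.items():
        if other == user or product not in other_ratings:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        num += sim * other_ratings[product]
        den += sim
    return num / den if den else 0.0

def category_profile(user):
    """Rating-weighted average membership of the user's rated products."""
    total = sum(ratings[user].values())
    categories = {c for p in ratings[user] for c in memberships[p]}
    return {
        c: sum(r * memberships[p].get(c, 0.0) for p, r in ratings[user].items()) / total
        for c in categories
    }

def fuzzy_affinity(profile, product_membership):
    """Max-min composition: best overlap between user and product categories."""
    return max(min(profile.get(c, 0.0), mu) for c, mu in product_membership.items())

predicted = predict_rating("ana", "shelf")
affinity = fuzzy_affinity(category_profile("ana"), memberships["shelf"])
score = predicted * affinity  # assumed way of combining both signals
print(f"predicted={predicted:.2f} affinity={affinity:.2f} score={score:.2f}")
```

Because the affinity takes the maximum over categories, a product such as the hypothetical "shelf" can be recommended through its partial "office" membership even though it is not primarily an office product.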

Finally, we are conducting experimentation sessions and case studies with the Hospital Nacional de Parapléjicos de Toledo (HNPT) in order to address a line of research on accessibility and inclusivity options in VR environments. Moreover, these adaptations and accessibility options can be applied to shopping environments in VR.